Beginning the Debate: Visual SLAM vs Visual Odometry

If you’re developing autonomous systems that need real-time, high-precision navigation without pre-existing maps, Visual SLAM is the stronger choice because it builds a map while localizing within it. However, if you need lightweight, robust pose estimation in GPS-denied or indoor environments, particularly for UAV missions, opt for Visual Odometry.

Key Differences Between Visual SLAM and Visual Odometry

- Visual SLAM estimates the camera's pose and builds a map of the environment at the same time, offering capabilities beyond pure navigation. Visual Odometry focuses only on determining position and orientation.

- Visual SLAM involves a more complex process, including Feature Extraction, Mapping, Loop Closure, and Optimization. Visual Odometry concentrates on stages such as Image Correction and Camera Motion Estimation.

- Visual SLAM has the larger market prospects, with the global SLAM market projected to reach $1.2bn by 2027, driven mostly by autonomous vehicles. Visual Odometry is critical in GPS-denied environments, particularly for UAVs.

- Both use camera(s) as the primary sensor. Visual Odometry systems often add an Inertial Measurement Unit (IMU), and Visual SLAM systems can as well.

| Comparison | Visual SLAM | Visual Odometry (VO) |
|---|---|---|
| Purpose | Navigating and mapping without pre-existing maps or external positioning | Determining position and orientation by analyzing images |
| Use in Autonomous Systems | Yes | Yes |
| Methods | Feature-based (PTAM, ORB-SLAM) and direct (LSD-SLAM, DSO) | Feature-based and direct methods |
| Processing Stages | Feature extraction, feature tracking, mapping, loop closure, optimization, and localization | Image acquisition, image correction, feature detection, feature extraction and correlation, and camera motion estimation |
| Primary Input | Camera(s) | Camera images |
| Major Challenges | Localization errors and the impact of noise | Drift, occlusions, motion blur, rapid camera movements, feature quality, and lighting conditions |
| Applications | Robotics, AR/VR, autonomous vehicles, and industrial automation | Autonomous navigation, obstacle avoidance, and precise UAV landing in GPS-denied or indoor environments |
| Role in Career Opportunities | Increasing demand in robotics R&D, autonomous vehicle companies, AR/VR startups, and industrial automation | Important for executing UAV missions where the GNSS signal or datalink is unavailable or suffers interference |

What Is Visual SLAM and Who’s It For?

Visual SLAM (Simultaneous Localization and Mapping) combines Computer Vision, Robotics, and Artificial Intelligence. It is central to autonomous systems because it delivers navigation without pre-existing maps or external positioning. SLAM was first conceptualized in the 1980s, and advances in mobile robotics in the late 1990s accelerated its development. Today, a typical pipeline consists of Feature Extraction, Feature Tracking, Mapping, Loop Closure, Optimization, and Localization.
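To make the Loop Closure and Optimization stages a little more concrete, here is a minimal sketch rather than a real SLAM back end. It assumes a translation-only 2D robot (no rotations), a constant drift bias on every odometry step, and a single loop-closure measurement saying the last pose coincides with the first. The names `optimize_positions` and `loop_weight` are illustrative and do not come from any library.

```python
import numpy as np

def optimize_positions(odometry, loop_closures, loop_weight=10.0):
    """Least-squares estimate of 2D positions from relative measurements.

    odometry: (N-1, 2) measured displacement from pose i to pose i+1.
    loop_closures: list of (i, j, displacement), meaning x_j - x_i ~ displacement.
    """
    odometry = np.asarray(odometry, dtype=float)
    n = len(odometry) + 1
    rows, rhs = [], []

    def add_constraint(i, j, d, w):
        # One weighted linear equation: w * (x_j - x_i) = w * d
        row = np.zeros(n)
        row[j], row[i] = w, -w
        rows.append(row)
        rhs.append(w * np.asarray(d, dtype=float))

    for i, d in enumerate(odometry):
        add_constraint(i, i + 1, d, 1.0)
    for i, j, d in loop_closures:
        add_constraint(i, j, d, loop_weight)

    # Pin pose 0 at the origin with a heavily weighted prior row,
    # otherwise the whole trajectory could shift freely.
    anchor = np.zeros(n)
    anchor[0] = 1e3
    rows.append(anchor)
    rhs.append(np.zeros(2))

    positions, *_ = np.linalg.lstsq(np.vstack(rows), np.vstack(rhs), rcond=None)
    return positions

# A square path (5 unit steps per side) that truly ends where it started,
# measured with a small constant bias on every step (assumed drift model).
steps = [(1, 0)] * 5 + [(0, 1)] * 5 + [(-1, 0)] * 5 + [(0, -1)] * 5
odometry = np.array(steps, dtype=float) + np.array([0.02, 0.01])

dead_reckoned = np.vstack([[0.0, 0.0], np.cumsum(odometry, axis=0)])
corrected = optimize_positions(odometry, [(0, len(steps), (0.0, 0.0))])

print("end-point error without loop closure:", np.linalg.norm(dead_reckoned[-1]))
print("end-point error with loop closure:   ", np.linalg.norm(corrected[-1]))
```

Without the loop closure, the accumulated bias leaves the final pose away from the start. With it, the optimizer spreads the correction over the whole trajectory. Real pipelines also optimize rotations, which makes the problem nonlinear, so treat this only as an illustration of the idea.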

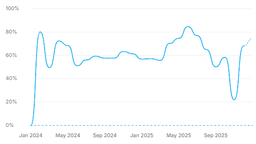

Visual SLAM is targeted toward robotics R&D, autonomous vehicle companies, AR/VR startups, and industrial automation sectors. With the global SLAM market expected to touch $1.2bn by 2027, expertise in Visual SLAM is poised to be a lucrative prospect.

Pros of Visual SLAM

- Equips autonomous systems with advanced navigation capabilities

- Applicable to a diverse gamut of fields, including AR/VR, autonomous vehicles, and industrial automation

- Its central role in Computer Vision, AI, and Robotics makes Visual SLAM expertise a promising career path

Cons of Visual SLAM

- Localization errors can accumulate and lead to substantial deviations from actual values

- Works best with specific camera hardware, typically a global-shutter, grayscale sensor

What Is Visual Odometry and Who’s It For?

Visual Odometry (VO) is the process of estimating a robot’s position and orientation from camera images. It first proved its utility on the Mars Exploration Rovers. VO is equivalent to conventional wheel odometry; the only difference is the source of the motion estimate, which is camera images rather than wheel encoders.
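The odometry analogy can be sketched in a few lines: whatever produces the frame-to-frame motion, the global pose is obtained by chaining those increments together. The example below is a simplified planar (2D) illustration using NumPy. The helper names `se2` and `integrate` are made up for this sketch, and the relative motions are hard-coded instead of being estimated from images.

```python
import numpy as np

def se2(dx, dy, dtheta):
    """Homogeneous 3x3 transform for a planar motion: translate by (dx, dy), rotate by dtheta."""
    c, s = np.cos(dtheta), np.sin(dtheta)
    return np.array([[c, -s, dx],
                     [s,  c, dy],
                     [0.0, 0.0, 1.0]])

def integrate(relative_motions):
    """Chain frame-to-frame motions into a list of global poses, starting at the identity."""
    pose = np.eye(3)
    trajectory = [pose]
    for dx, dy, dtheta in relative_motions:
        # Each increment is expressed in the current camera frame,
        # so it is applied on the right of the accumulated pose.
        pose = pose @ se2(dx, dy, dtheta)
        trajectory.append(pose)
    return trajectory

# Four identical increments: move 1 unit forward, then turn left 90 degrees.
square = [(1.0, 0.0, np.pi / 2)] * 4
final = integrate(square)[-1]
print(np.round(final, 6))
```

Because every new pose is built on the previous one, any error in a single increment is carried forward into all later poses. Note also that with a single camera the translation between frames is only recoverable up to an unknown scale, which this sketch ignores.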

VO is used in autonomous navigation, obstacle avoidance, and precise landing of UAVs, especially in environments without GPS. With advancements in computer vision, sensor technologies, and machine learning, VO is expected to become even more robust and precise.

Pros of Visual Odometry

- Enables accurate position and orientation estimation in adverse conditions

- Critical for UAV missions, particularly in GPS-denied or indoor environments

- Potential for growth with advances in related technologies

Cons of Visual Odometry

- Accumulated errors or drift over time

- Challenges with rapid camera movements, lighting conditions, and the quality of features
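To see why drift is the headline weakness, consider a toy dead-reckoning simulation. It assumes the camera actually moves in a straight line while each frame-to-frame rotation estimate is off by a small fixed bias. The function `dead_reckon` and its parameters are hypothetical, and real drift is noisier than a constant bias.

```python
import numpy as np

def dead_reckon(step_length, heading_bias_deg, n_steps):
    """Return the position error after each step when every heading update carries a fixed bias."""
    heading = 0.0
    position = np.zeros(2)
    errors = []
    for k in range(1, n_steps + 1):
        # Small per-frame rotation error accumulates in the heading estimate
        heading += np.deg2rad(heading_bias_deg)
        position = position + step_length * np.array([np.cos(heading), np.sin(heading)])
        # Ground truth: the camera really moved straight along the x axis
        truth = np.array([k * step_length, 0.0])
        errors.append(np.linalg.norm(position - truth))
    return np.array(errors)

errors = dead_reckon(step_length=1.0, heading_bias_deg=0.1, n_steps=500)
for k in (10, 100, 500):
    print(f"after {k:3d} steps: position error = {errors[k - 1]:.2f}")
```

The position error grows faster than linearly with distance travelled, because a heading error bends the whole remaining trajectory. This is the gap that loop closure in a SLAM system, or fusion with other sensors, is meant to close.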

Code Examples for Visual SLAM & Visual Odometry

Visual SLAM with Python and OpenCV

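This is a simple example of the feature-matching front end of a Visual SLAM pipeline in Python and OpenCV: it extracts SIFT features from a reference scene and counts how many of them match a new frame. It is not a full SLAM system, since there is no mapping, loop closure, or optimization. It is aimed at readers with a basic understanding of Python, computer vision, and OpenCV.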

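    import cv2

    # Load the reference scene in grayscale and extract SIFT keypoints and descriptors.
    # cv2.SIFT_create() lives in the main module from OpenCV 4.4 onward; older
    # opencv-contrib builds expose it as cv2.xfeatures2d.SIFT_create().
    scene = cv2.imread('scene.jpg', cv2.IMREAD_GRAYSCALE)
    if scene is None:
        raise FileNotFoundError('scene.jpg could not be read')
    sift = cv2.SIFT_create()
    kp1, des1 = sift.detectAndCompute(scene, None)

    # Initialize the FLANN matcher with a KD-tree index (index type 1; 0 is a linear search)
    FLANN_INDEX_KDTREE = 1
    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=50)
    flann = cv2.FlannBasedMatcher(index_params, search_params)

    def count_good_matches(new_frame, ratio=0.7):
        """Match a new grayscale frame against the reference scene and count the good matches."""
        kp2, des2 = sift.detectAndCompute(new_frame, None)
        if des1 is None or des2 is None or len(des2) < 2:
            return 0
        matches = flann.knnMatch(des1, des2, k=2)
        # Ratio test: keep a match only if it is clearly better than the runner-up
        good = [pair[0] for pair in matches
                if len(pair) == 2 and pair[0].distance < ratio * pair[1].distance]
        return len(good)

Visual Odometry using Python and OpenCV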

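This code snippet covers the tracking step of visual odometry: it follows corner features between two successive images using optical flow. The matched points it returns are the input for camera motion estimation, which is not shown here. It assumes basic knowledge of Python and OpenCV.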

    import cv2

    def track_flow(frame1, frame2):
        """Track Shi-Tomasi corners from frame1 into frame2 with Lucas-Kanade optical flow."""
        # Parameters for Shi-Tomasi corner detection
        feature_params = dict(maxCorners=100, qualityLevel=0.3, minDistance=7, blockSize=7)
        # Parameters for pyramidal Lucas-Kanade optical flow
        lk_params = dict(winSize=(15, 15), maxLevel=2,
                         criteria=(cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10, 0.03))
        # Find initial feature points (None if the image has no usable corners)
        p1 = cv2.goodFeaturesToTrack(frame1, mask=None, **feature_params)
        if p1 is None:
            return None, None
        # Track the points into the second frame
        p2, st, err = cv2.calcOpticalFlowPyrLK(frame1, frame2, p1, None, **lk_params)
        # Keep only the points that were tracked successfully (status == 1)
        tracked = st.ravel() == 1
        return p1[tracked], p2[tracked]

    # Load two consecutive frames in grayscale
    img1 = cv2.imread('frame1.jpg', cv2.IMREAD_GRAYSCALE)
    img2 = cv2.imread('frame2.jpg', cv2.IMREAD_GRAYSCALE)

    # Matched point pairs between the two frames
    p1, p2 = track_flow(img1, img2)

Visual SLAM vs Visual Odometry: Which Reigns Supreme?

So which should you choose, Visual SLAM or Visual Odometry? It depends on what you are building:

AR/VR Creators

Visual SLAM is the winner for AR/VR creators, given its adoption among AR/VR startups. Its capacity to extract visual features from images for map generation enables impressive real-time implementations, pushing the boundaries of perception in AR and VR.

Robotic Developers

If you are a robotics developer, you will likely lean towards Visual SLAM, given its origins in autonomous systems and mobile robotics and its widespread use across robotics R&D.

Autonomous Vehicle Designers

The growing market that fuels the autonomous vehicle industry calls for a reliable solution. With its proven expertise in the autonomous vehicle domain, Visual SLAM holds the key to your autonomous aspirations.

Industrial Automation Engineers

As an Industrial Automation Engineer, you might find Visual SLAM an efficient solution for automation projects, since filtering techniques such as Kalman filters help reduce its localization errors.
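As a rough illustration of how a Kalman filter reduces localization error, here is a scalar (1D) sketch. It assumes a robot moving one unit per step along a line, near-perfect motion increments, and noisy absolute position measurements. The function `kalman_1d`, the noise levels, and the random seed are all choices made for this example. SLAM systems that use filtering work with much larger state vectors.

```python
import numpy as np

def kalman_1d(measurements, motions, meas_var, motion_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter: predict with the motion increment, correct with the measurement."""
    x, p = x0, p0
    estimates = []
    for z, u in zip(measurements, motions):
        # Predict: apply the motion and grow the uncertainty
        x, p = x + u, p + motion_var
        # Update: blend in the measurement according to the Kalman gain
        k = p / (p + meas_var)
        x, p = x + k * (z - x), (1.0 - k) * p
        estimates.append(x)
    return np.array(estimates)

def rms(e):
    return float(np.sqrt(np.mean(np.square(e))))

rng = np.random.default_rng(0)
truth = np.arange(1, 51, dtype=float)               # robot advances 1 unit per step
motions = np.ones(50)                               # commanded/odometry increments
measurements = truth + rng.normal(0.0, 2.0, 50)     # noisy position readings (std 2)

filtered = kalman_1d(measurements, motions, meas_var=4.0, motion_var=0.01)
print("RMS error of raw measurements:", rms(measurements - truth))
print("RMS error after filtering:    ", rms(filtered - truth))
```

The filter weighs each noisy reading against what the motion model predicts, so single bad measurements pull the estimate far less than they would on their own.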

UAV Developers

For UAV developers who battle GPS-denied or indoor environments, Visual Odometry, with its high positional accuracy and reliability, is the tool to navigate your UAV missions to success.

If you need a navigation tool that also maps its surroundings, Visual SLAM’s real-time mapping is the better match. However, with its precise motion tracking, Visual Odometry wins in UAV navigation. The right choice ultimately depends on what your project needs.