Comparing Giants: Visual SLAM vs LiDAR SLAM – What You Need to Know

For precise, reliable mapping, particularly in challenging environments, choose LiDAR SLAM. It leads in mapping accuracy and produces data that is easy to georeference. However, if cost and sensor availability are your main constraints, Visual SLAM, which works from camera data, is your go-to.

Key Differences Between Visual SLAM and LiDAR SLAM

- Visual SLAM uses camera input for mapping while LiDAR SLAM employs lasers to measure distances.

- GeoSLAM, a geospatial company, commercialized LiDAR SLAM, whereas Visual SLAM originated in robotics research.

- LiDAR SLAM tolerates physically challenging environments whereas Visual SLAM is more prone to localization errors.

- While Visual SLAM demands specific camera specifications, LiDAR SLAM allows optimization tailored to the capturing environment.
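
The laser ranging mentioned in the first bullet comes down to time-of-flight arithmetic: a pulse travels to a surface and back, so the range is half the round-trip time multiplied by the speed of light. A minimal sketch, with the function name `range_from_round_trip` and the sample timing chosen purely for illustration:

```python
# Speed of light in vacuum, metres per second (exact SI value).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance in metres for a laser pulse's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical echo received 100 nanoseconds after the pulse was emitted.
    print(f"{range_from_round_trip(100e-9):.2f} m")
```

A real sensor also has to deal with timing jitter, beam divergence, and surface reflectivity, none of which this sketch models.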

| Comparison | Visual SLAM | LiDAR SLAM |
|---|---|---|
| Creation Period | 1980s | 2008 |
| Real-time Capabilities | Yes (PTAM algorithm) | Yes (Continuous-time SLAM) |
| Applications | Robotics, AR, VR, autonomous vehicles, industrial automation | Robotics, autonomous vehicles, drone delivery, home robot vacuums, warehouse mobile robot fleets |
| Principal Sensing Method | Camera visuals | LiDAR (light detection and ranging) |
| Popular Implementations | ORB-SLAM, LSD-SLAM, DSO | GeoSLAM |
| Optimization | Use of Kalman filters to reduce noise and uncertainty | Signal processing and pose-graph |
| Details | Award-winning technology; added semantics enhance the level of autonomy | Easily georeferenced data, outperforms others in accuracy, more efficient than GPS in certain environments |
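
The Kalman filtering named in the Optimization row can be illustrated in one dimension. The sketch below is not taken from any SLAM library; the function `kalman_1d`, the noise variances, and the sample readings are all illustrative assumptions. It fuses noisy measurements of a constant value and shows the estimate's variance shrinking with each update.

```python
def kalman_1d(measurements, meas_var, process_var=0.0, init_est=0.0, init_var=1e3):
    """Run a scalar Kalman filter over measurements of a (nearly) constant value.

    Returns a list of (estimate, variance) tuples, one per measurement.
    """
    est, var = init_est, init_var
    history = []
    for z in measurements:
        # Predict: the state model is "value stays the same", so only uncertainty grows.
        var += process_var
        # Update: the gain weighs the new measurement against the current estimate.
        gain = var / (var + meas_var)
        est = est + gain * (z - est)
        var = (1.0 - gain) * var
        history.append((est, var))
    return history


if __name__ == "__main__":
    # Hypothetical noisy range readings (metres) of a wall about 5 m away.
    readings = [5.2, 4.9, 5.1, 4.8, 5.0]
    for est, var in kalman_1d(readings, meas_var=0.04):
        print(f"estimate={est:.3f} m  variance={var:.4f}")
```

Filter-based SLAM systems apply the same predict and update cycle to a much larger state (camera pose plus landmark positions), with matrices in place of these scalars.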

What Is Visual SLAM and Who’s It For?

Visual SLAM, or visual Simultaneous Localization and Mapping, is a technology that combines computer vision, AI, and robotics. It allows autonomous systems to navigate without prior maps or external location data. Growing out of research in the early 1980s, it has driven substantial growth in mobile robotics since the late 1990s. Today’s more advanced algorithms have enabled its adaptation to diverse applications, ranging from AR/VR to industrial automation.

It’s a promising space for tech professionals, robotics R&D businesses, autonomous vehicle makers, AR/VR developers, and industrial automation teams. The global SLAM market is forecast to reach $1.2bn by 2027, underlining its commercial potential.

Pros of Visual SLAM

- Supports autonomous navigation without pre-existing maps.

- Has a wide range of applications, from VR/AR to robotics, autonomous vehicles, and industrial automation.

- Offers promising career prospects and growing market value.

- Uses visual features for navigation, reducing reliance on hardware.

Cons of Visual SLAM

- Accumulated localization errors could lead to deviations from actual values.

- Requires a global shutter camera with a specific resolution for optimal functioning.

- Real-time implementation is comparatively challenging.
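
The first drawback, accumulated localization error, is easy to reproduce numerically. The following sketch is a toy dead-reckoning model, not a Visual SLAM pipeline: `integrate_path`, `drift`, and the 0.5-degree per-step heading bias are assumptions made for illustration. It integrates the same straight-line motion with and without a small heading bias and reports how far the two position estimates end up apart.

```python
import math


def integrate_path(steps, step_len, heading_bias_rad=0.0):
    """Dead-reckon a path meant to be straight along +x; return the final (x, y)."""
    x = y = heading = 0.0
    for _ in range(steps):
        # Each step's small heading error is added on top of all earlier ones.
        heading += heading_bias_rad
        x += step_len * math.cos(heading)
        y += step_len * math.sin(heading)
    return x, y


def drift(steps, step_len=0.1, heading_bias_rad=math.radians(0.5)):
    """Distance between the unbiased and the biased final position estimates."""
    true_x, true_y = integrate_path(steps, step_len)
    est_x, est_y = integrate_path(steps, step_len, heading_bias_rad)
    return math.hypot(est_x - true_x, est_y - true_y)


if __name__ == "__main__":
    for n in (10, 100, 200):
        print(f"after {n:3d} steps: drift = {drift(n):.3f} m")
```

In this toy model the gap grows much faster than the step count, which is the behaviour the bullet warns about.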

What Is LiDAR SLAM and Who’s It For?

LiDAR SLAM, developed by Australia’s national science agency, CSIRO, in 2008, moved from image-based orientation to continuous-time SLAM, which is essential for laser scanning. GeoSLAM, the product of a collaboration with 3D Laser Mapping, champions this technology. Its GeoSLAM Beam and Connect products offer tailored SLAM solutions adaptable to any capturing environment.
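
Continuous-time SLAM treats the sensor trajectory as a function of time, so each laser return is placed using the pose at its own timestamp instead of one pose per sweep. A rough 2D sketch of that idea follows. It is not GeoSLAM's or CSIRO's implementation, and the names `interpolate_pose` and `deskew_point`, the linear interpolation, and the sample values are all assumptions for illustration.

```python
import math


def interpolate_pose(pose_start, pose_end, t_start, t_end, t):
    """Linearly interpolate an (x, y, heading) pose at time t within [t_start, t_end]."""
    alpha = (t - t_start) / (t_end - t_start)
    # Plain linear blending of heading is adequate for the small rotations in one sweep.
    return tuple(a + alpha * (b - a) for a, b in zip(pose_start, pose_end))


def deskew_point(point_sensor, pose):
    """Transform a sensor-frame point (x, y) into the world frame using the given pose."""
    px, py = point_sensor
    x, y, heading = pose
    c, s = math.cos(heading), math.sin(heading)
    return (x + c * px - s * py, y + s * px + c * py)


if __name__ == "__main__":
    # Hypothetical sweep: the sensor moves 1 m forward between t=0.0 s and t=0.1 s.
    start, end = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
    # The same sensor-frame return, measured at three different instants in the sweep.
    for t in (0.0, 0.05, 0.1):
        pose = interpolate_pose(start, end, 0.0, 0.1, t)
        print(t, deskew_point((2.0, 0.0), pose))
```

Placing every return with a single per-sweep pose would smear the scan whenever the sensor moves during the sweep, which is why handheld and mobile laser scanning lean on a time-dependent trajectory.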

Diverse sectors will find LiDAR SLAM valuable, from autonomous vehicle manufacturers to developers of robots for home use and warehouse logistics. The market for automated guided vehicles (AGVs) and autonomous mobile robots (AMRs) is projected to grow to as much as $18B by 2027, showcasing the potential of the technology.

Pros of LiDAR SLAM

- Efficient intra-map localization and map creation.

- Superior mapping accuracy, especially when using the Velodyne VLP-16 sensor.

- Provides more reliable data than GPS in obstructed environments.

- Useful for home robot vacuums, mobile robot fleets, autonomous cars, and drones.

Cons of LiDAR SLAM

- Maps may need to be redrawn when significant changes occur in the environment.

- Relies on frequent software updates for improved performance.

- Dependent on laser light detection, posing challenges in certain environments.

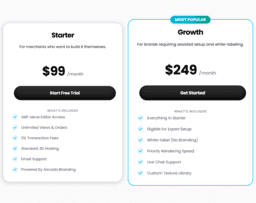

Visual SLAM vs LiDAR SLAM: Pricing

By comparison, Visual SLAM relies on low-cost hardware such as cameras, while LiDAR SLAM setups can drive costs up because they need specialized hardware.

Visual SLAM

Visual SLAM largely uses camera sensors, typically with VGA resolution (640×480 pixels). These cameras are effective at perceiving the surrounding environment, yet relatively affordable and widely available. Current research and development spending goes mainly to algorithm development and software optimization. Increasing demand in domains such as autonomous vehicles and AR/VR startups is contributing to overall market growth, which is anticipated to climb from $245.1 million in 2021 to $1.2 billion by 2027, according to BCC Research.

LiDAR SLAM

LiDAR SLAM, compared to Visual SLAM, usually requires high-precision laser scanners, hardware that can raise costs considerably. Additionally, optimizing the performance of these systems often demands frequent software updates, which can carry costs of their own. Growth in the relevant robotics sector is strong, as shown by the projection of up to $18 billion by 2027 for Automated Guided Vehicles (AGVs) and Autonomous Mobile Robots (AMRs) featuring SLAM technology. Nonetheless, the overall cost of LiDAR SLAM in these sectors is likely to be substantially higher once equipment and ongoing maintenance are counted.

Code Examples for Visual SLAM & LiDAR SLAM

Visual SLAM

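This Visual SLAM example uses OpenCV to compute dense optical flow between consecutive video frames with the Farneback method. It is a Python snippet covering one building block of visual odometry, frame-to-frame motion tracking. Feature-based systems such as ORB-SLAM track sparse ORB (Oriented FAST and Rotated BRIEF) keypoints instead, and the snippet does not perform mapping. Prerequisites are Python with the OpenCV and NumPy libraries.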

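```python
import cv2
import numpy as np

cap = cv2.VideoCapture('path_to_video')  # replace with a real video file path

ret, frame1 = cap.read()
if not ret:
    raise SystemExit('Could not read the first frame')

prvs = cv2.cvtColor(frame1, cv2.COLOR_BGR2GRAY)

# HSV image used to visualize the flow: hue = direction, value = magnitude
hsv = np.zeros_like(frame1)
hsv[..., 1] = 255  # full saturation

while True:
    ret, frame2 = cap.read()
    if not ret:  # end of the video
        break
    nxt = cv2.cvtColor(frame2, cv2.COLOR_BGR2GRAY)

    # Dense optical flow between consecutive frames (Farneback method)
    flow = cv2.calcOpticalFlowFarneback(prvs, nxt, None, 0.5, 3, 15, 3, 5, 1.2, 0)

    mag, ang = cv2.cartToPolar(flow[..., 0], flow[..., 1])
    hsv[..., 0] = ang * 180 / np.pi / 2
    hsv[..., 2] = cv2.normalize(mag, None, 0, 255, cv2.NORM_MINMAX)
    bgr = cv2.cvtColor(hsv, cv2.COLOR_HSV2BGR)

    cv2.imshow('frame2', bgr)
    k = cv2.waitKey(30) & 0xff
    if k == 27:  # Esc quits
        break
    elif k == ord('s'):  # 's' saves the current frame and its flow image
        cv2.imwrite('opticalfb.png', frame2)
        cv2.imwrite('opticalhsv.png', bgr)
    prvs = nxt

cap.release()
cv2.destroyAllWindows()
```

LiDAR SLAM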

This example runs a single ICP (Iterative Closest Point) alignment between two point clouds in Python, using the python-pcl bindings for PCL (Point Cloud Library). Scan-to-scan registration of this kind is a foundational step in LiDAR SLAM, not a complete SLAM system. You’ll need Python with python-pcl and NumPy installed.

```python
import numpy as np
import pcl  # python-pcl bindings


def do_icp(source_cloud, target_cloud, max_iteration):
    """Align source_cloud to target_cloud with ICP and return the results."""
    icp = source_cloud.make_IterativeClosestPoint()
    # Assumes the python-pcl wrapper, whose icp() takes both clouds
    # and an optional iteration cap.
    convergence, transf, estimate, fitness = icp.icp(
        source_cloud, target_cloud, max_iter=max_iteration)
    return convergence, transf, estimate, fitness


def convert_array_to_point_cloud(arr):
    """Build a pcl.PointCloud from an (N, 3) array of XYZ points."""
    cloud = pcl.PointCloud()
    cloud.from_array(arr.astype(np.float32))
    return cloud


# Placeholder data: replace with your own (N, 3) arrays of XYZ points.
source_arr = np.random.rand(100, 3)
target_arr = source_arr + np.array([0.1, 0.0, 0.0])  # source shifted along x

source_cloud = convert_array_to_point_cloud(source_arr)
target_cloud = convert_array_to_point_cloud(target_arr)

con, transf, est, fit = do_icp(source_cloud, target_cloud, 100)

print('ICP Convergence:', con)
print('Transformation Matrix:', transf)
print('Estimate:', est)
print('Fitness:', fit)
```

Visual SLAM or LiDAR SLAM: Which Gets the Checkered Flag?

Let’s speed down the verdict lane, examining which technology, Visual SLAM or LiDAR SLAM, leads in different user scenarios.

Tech Researchers and Developers

Built to navigate unmapped terrain, Visual SLAM aligns well with researchers and developers. It offers the chance to build expertise in a rapidly evolving field and comes with promising career prospects. Plus, it’s linked to the burgeoning global SLAM market, expected to zoom from $245.1m in 2021 to $1.2bn by 2027.

AR/VR Creatives and Game Makers

Brush off the confetti: Visual SLAM is the winner for AR/VR creatives and game makers. The tech radically enhances user experiences with seamless integration of AI, Robotics, and Computer Vision. Besides, being part of breakthrough innovations like ORB-SLAM and LSD-SLAM can be a badge of honor in the tech community.

Industrial Automation and Autonomous Vehicle Engineers

LiDAR SLAM makes a robust case for itself in industrial automation and autonomous vehicle manufacturing. Its advanced sensing and signal processing, combined with GeoSLAM Beam’s customized SLAM processing, make it highly reliable. Plus, it outperforms other SLAM systems in mapping accuracy, no small feat in space-critical domains.

Product Owners in Robotics

LiDAR SLAM wins the robotics leg: adding semantics to SLAM enables higher-level autonomy. The AGV and AMR market is predicted to surge to as much as $18B by 2027, and device performance improvements delivered through software updates further enhance its appeal.

In the dueling arena of Visual SLAM vs. LiDAR SLAM, Visual SLAM captures the flag for tech researchers, developers, and AR/VR creatives with its rapid evolution and rich application spectrum. Yet LiDAR SLAM follows close behind, taking the laurels in robotics and autonomous vehicles with its reliable accuracy and software update benefits.